1.1 Understanding Hamming Distance

1.1.1 What is the Hamming distance between two binary strings?

The Hamming distance of two code words can be calculated as the number of 1 bits in the bitwise exclusive-or of the two code words. For example, the Hamming distance between the code words 1001 and 0101 is:

1001

0101 (XOR)

----------------

1100

----------------

Since there are two 1's in the output the Hamming Distance is 2.

1.1.2 How does error detection map to Hamming Distance?

This is what I understand:

Number of errors that can be detected = Minimum Hamming Distance between any two code words |

For calculating minimum Hamming distance you can take any two adjacent code words.

For calculating maximum Hamming distance you should take the first and last code word in the list.

1.1.2.1 Example with 3 bit code words

Data Word | Even Parity | Code Word |

00 | 0 | 000 |

01 | 1 | 011 |

10 | 1 | 101 |

11 | 0 | 110 |

Minimum Hamming Distance = 2

Therefore number of errors that can be detected = 2

Maximum Hamming Distance = 2

Given any two codes in the above list, you need two at least two bit flips to convert one code to another. Therefore this coding approach can detect up to two errors.

1.1.2.2 Example with 4 bit code words

Data Word | Even Parity Bit | Code Word |

000 | 0 | 0000 |

001 | 1 | 0011 |

010 | 1 | 0101 |

011 | 0 | 0110 |

100 | 1 | 1001 |

101 | 0 | 1010 |

110 | 0 | 1100 |

111 | 1 | 1111 |

Minimum Hamming Distance = 2

Maximum Hamming Distance = 4

This coding scheme can detect up to 2 errors

We can generalize saying that when using Parity, the minimum Hamming distance will always be equal to 2.

To detect d single-bit errors, a code must have a minimum Hamming distance of at least d + 1.

To correct d single-bit errors, a code must have a minimum Hamming distance of at least 2d + 1.

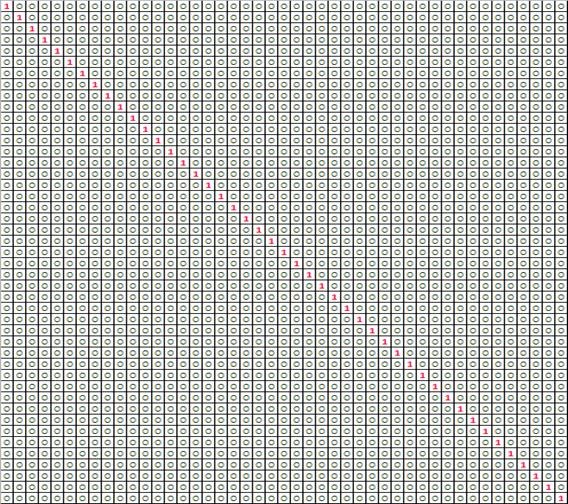

1.2 Working out the Hamming Code

1.3 The Internet Checksum

RFC | Title | Description |

1071 | Computing the Internet Checksum |

|

1141 | Incremental Updating of the Internet Checksum |

|

1624 | Computation of Internet Checksum via Incremental Update |

|

Let us say we want to send the following 64 bit data stream:

Binary | 0000000000000001 1111001000000011 1111010011110101 1111011011110111 |

Hexadecimal | 0001 F203 F4F5 F6F7 |

Sender - Checksum computation

Step | Description | Variable Name | Binary Representation | Hexadecimal Representation |

1 | Arrange the 64 bit data as 16 bit words - one below the other | First Byte | 0000000000000001 | 0001 |

Second Byte | 1111001000000011 | F203 | ||

Third Byte | 1111010011110101 | F4F5 | ||

Fourth Byte | 1111011011110111 | F6F7 | ||

2 | Put all zeroes in the checksum position | Checksum Byte | 0000000000000000 | 0000 |

3 | Add all the above numbers | Sum with carryover | 101101110111110000 (The 10 on the left is the carryover of the addition) | 2DDF0 |

4 | Take the carryover bits from the number and add them back | 16 bit Sum | 1101110111110000 1101110111110010 | DDF2 |

5 | Negate the 16 bit Sum | Checksum | 0010001000001101 | 220D |

6 | Append the Checksum to the original data and transmit. The length of the data transmitted is 64+16 = 80 bits | Final Code | 0000000000000001 1111001000000011 1111010011110101 1111011011110111 0010001000001101 | 0001 F203 F4F5 F6F7 220D |

Receiver - Checksum Validation

Step | Description | Variable Name | Binary Representation | Hexadecimal Representation |

1 | Arrange the received data into 16 bit words - one below the other | First Byte | 0000000000000001 | 0001 |

Second Byte | 1111001000000011 | F203 | ||

Third Byte | 1111010011110101 | F4F5 | ||

Fourth Byte | 1111011011110111 | F6F7 | ||

Checksum Byte | 0010001000001101 | 220D | ||

2 | Add all the above numbers | Sum with carryover | 101111111111111101 | 0000 |

3 | Take the carryover bits from the number and add them back | 16 bit Sum | 1111111111111101 1111111111111111 | DDF2 |

4 | Negate the 16 bit Sum | Checksum Result | 0000000000000000 | 0000 |

The same algorithm is applied at the receiver as well.

It can correct burst errors (errors in many consecutive bits rather than occurring independently of each other) up to 16 bits.

For 16 bit checksum there are 216 possible values. So the error detection is going to be much more efficient that Parity.

It is easy for the programmers to see what is going on inside a computer

http://www.youtube.com/user/numberphile/

Octal Representation | Binary Representation | UNIX file permissions |

0 | 000 | No Permission |

1 | 001 | Execute Only |

2 | 010 | Write Only |

3 | 011 | Write and Execute Only |

4 | 100 | Read Only |

5 | 101 | Read and Execute Only |

6 | 110 | Read and Write Only |

7 | 111 | Read, Write and Execute |

2 Cyclic Redundancy Check

CRC is based on the mathematics of finite fields. It is difficult for us to work out the generator to be used because it has been done by people for years!

What are the problems of using a checksum?

1. If the checksum itself is corrupted, a correctly transmitted message might be incorrectly identified as a corrupted one. However, this is a safe-side failure.

2. A dangerous-side failure occurs where the message and/or checksum is corrupted in a manner that results in a transmission that is internally consistent.

Unfortunately, this possibility is completely unavoidable and the best that can be done is to minimize its probability by increasing the amount of information in the checksum (e.g. widening the checksum from one byte to two bytes).

What is a CRC algorithm?

CRC algorithms, which fall into the class of error detection algorithms that leave the data intact and append a checksum on the end.

The basic idea of CRC algorithms is simply to treat the message as an

enormous binary number, to divide it by another fixed binary number,

and to make the remainder from this division the checksum. Upon

receipt of the message, the receiver can perform the same division and

compare the remainder with the "checksum" (transmitted remainder).

Why division is better than addition?

Although the effect of each bit of the input message on the quotient

is not all that significant, the 4-bit remainder gets kicked about

quite a lot during the calculation, and if more bytes were added to

the message (dividend) it's value could change radically again very

quickly. This is why division works where addition doesn't.

CRC-1 | most hardware; also known as parity bit |